6. Machine intelligence amplifies “the meta” of the things we make

When creative work is made for an audience to use or interact with, the line separating creative works and creative tools blurs, and the roles of tool user and tool maker start to overlap. Examples from today’s creative landscape suggest these overlaps are helping to expand the creative process. Observations of machine intelligence, in turn, highlight its role within this overlapping “meta”.

Move + See: Creative works that become creative tools

“Art Through Technology” was a pop-up exhibition at the 2019 Frieze New York art fair.1 As visitors arrived, a large, black, vertical screen welcomed them. On it floated a white, spring-like shape, animating both vertically and horizontally.

At first glance, the object’s on-screen movements might have seemed random, but stepping in front of the glass revealed their source… you. Printed on the wall above the screen were the words “Move + See”.2

Later, on Twitter, the piece’s creator, Zach Lieberman, posted visuals of people interacting with the display. The shape can be seen twisting and growing in response to its human collaborators: some move slowly, one limb at a time, while others jump and wave wildly. Lieberman also commented, “[P]eople dismiss interactive mirrors but I think it’s powerful to see your body transformed in new ways, to be reminded that you have a body and to explore the expressive space of gesture through augmentation. [With computers] we mostly just click, type, and swipe etc.—feels good to really move”.3

Lieberman’s mirror is a helpful example of work made to exist as a creative system. It has no definitive form, and everyone sees it differently. Furthermore, the behaviors and rules it follows are directly tied to its viewer. As a result, this interactive canvas raises the same question our reimagining of creative assets and file formats did: “is this a creative work or a creative tool?”

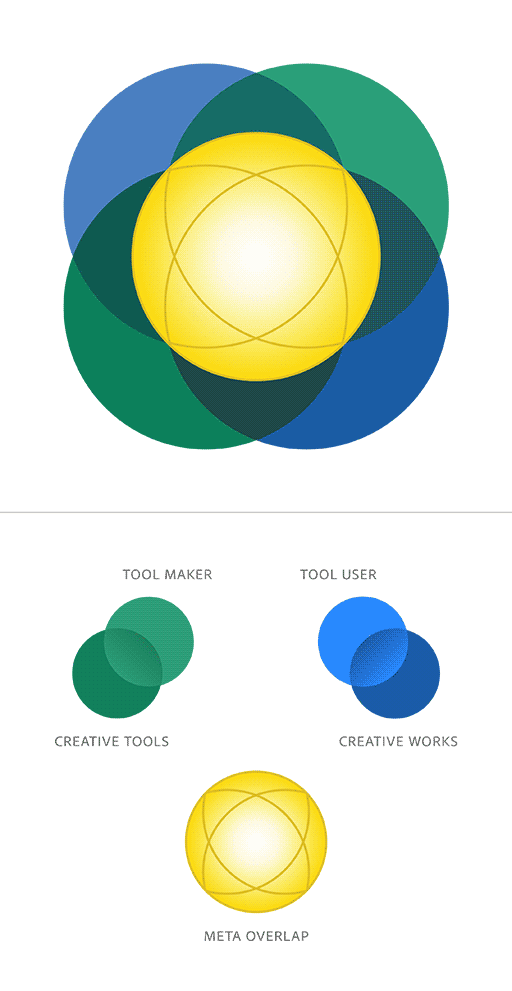

“The Meta”: An overlapping space for Tool Users and Tool Makers

Truthfully, the point of this question isn’t the answer; it’s the overlapping space our attention is drawn to when the question gets asked. Although we might typically say that “Tool Users” make “Creative Works” and “Tool Makers” make “Creative Tools”, in the overlap these relationships mix. Within this “meta” space, an artist can create work that’s in fact a tool for others to create with, a viewer can assume the role of an artist, a creative asset can function as a creative material, and a tool user can operate as a tool maker.

Today, interactive works that step into this overlapping “meta” are commonly powered by programming and code. Indeed, Lieberman opens his Twitter bio with “I make art with code”.4 But this “meta” relationship between creative works and creative tools isn’t restricted to custom-built interactive art. In fields such as graphic design, creatives make assets intended to be used by others every day. A brand identity, for example, is a creative system built to flexibly guide the application of logos, color, layout, typography, and more.

Similarly, creatives often make things for each other, sharing reusable assets, templates, and themes, or packaging presets, actions, and processes for their peers to use. Popular online platforms and blogs offer a constant stream of new resources, serving every discipline from web development to illustration.5 Even if creatives think of themselves only as tool users, making something intended as a resource for others takes them into the overlap and the role of tool maker.

Some creators even gain such a following for their tools that tool making becomes their primary occupation and creative focus. Kyle T. Webster, an award-winning illustrator, did just that…

Tool User to Tool Maker: An interview with Kyle T. Webster

KyleBrush.com launched in 2013 as a marketplace for the custom Photoshop brushes Webster had been creating alongside his professional illustration practice. Even next to a portfolio that included work for TIME, The New Yorker, Scholastic, Nike, and The New York Times, the quality of KyleBrush products was hard to ignore. As the brushes grew in popularity, they quickly became an industry standard among artists at the likes of Pixar, Disney, Cartoon Network, and many more.6

Adobe acquired KyleBrush in 2017, and Webster’s brushes are now available to all Creative Cloud subscribers. Currently, Webster works as a Senior Design Evangelist for Adobe and is still an illustrator, educator, and brush maker.

The following is an interview with Kyle, discussing his experiences as both a tool user and tool maker, and how exposure to both roles has shaped his creative approach:

Archie Bagnall. Do you remember the first ever custom brush you created? How did it come about, and how was it created?

Kyle Webster. I was working at a design firm, and we had a client CEO who was very interested in having a painted annual report, but gave only two weeks to produce it. I told my boss, “I think I could fake this in Photoshop”. I opened the Photoshop brush settings panel and played around until I was able to create what appeared to be a more natural brush stroke. It was very simple, but I did it – made this set of paintings and we produced the annual report. I did the whole thing with that one brush, just resizing it up and down. At the end the CEO asked if he could buy one of the originals and we had to confess that we didn't have any.

AB. How did you go deeper into the world of tool making? Was it overwhelming on a technical level?

KW. I had no idea what any of it did. I just knew that there was the brush settings panel, and I had never played with it before. Those first tools were not advanced in any way; I would open the panel when I had nothing else to do and just play and learn more about making brushes. It was fun for me, which I think is a hugely important part of the equation. As I kept going and it got more serious, I never, ever stepped outside of the engine; I just maxed out what was possible in the panel. I was just sort of figuring out ways to cheat it and trying to do things it was never intended to do.

AB. Throughout this journey, did you always think of yourself as “an illustrator who made brushes”, or was there a moment you recognized two distinct sides to yourself: one that was an Illustrator and one that was a Tool Maker?

KW. I was Kyle the Illustrator making brushes for my own purposes for a long, long time. It was an exciting and fun thing for me to do because I could work in different styles to help make clients happy. But there was a shift in 2014 when other people started calling me a Brush Maker. I didn't ever set out for that to happen, but when I noticed that it was happening I embraced the role and took it very seriously. In the last few years of running my personal business, what was originally a balance of 80% illustration and 20% brushes had become 90% brushes and 10% illustration.

AB. As that balance in how you were spending your time shifted, did you feel like your process as a creator was shifting with it?

KW. There was a huge change, and you can trace it over time if you look at my portfolio. I used to work in a very specific illustration style Art Directors could recognize as being “oh that's how Kyle draws”. But when I started making the brushes, what wound up happening was a brush would actually allow me to work in a completely different style, and then develop and evolve that style into something marketable that I could use in my illustration work. It allowed me to have a lot more fun with my job: to experiment a lot more with style, to do painting and drawing in different ways, and to do more shape-based work. My portfolio became this collection of work where none of it looked like the same artist had done it. It goes completely against the conventional wisdom you hear in art school. They tell you “you must lock onto a way of working so you can market your work and art directors won't be confused”. The opposite happened for me. I started getting jobs outside of the editorial and illustration world (advertising jobs, book jobs, and comics stuff) because I had this varied portfolio of work. And so, to this day, probably the most fun thing for me to do is just sit down, grab any random brush I've made, put down a few marks, and then respond to what I see and let the image just kind of evolve from there. I have no plan really in mind; I just put something down and then start playing. It's kind of a whole new way of working that I just never would have discovered without making all these tools.

AB. Did you see that same approach of exploration and response reflected in your brush making too?

KW. All the time! A good half of the brushes I made were a response to a brush I'd already made, or to one I would see doing one thing, which gave me an epiphany: “oh, what if it did this as well?” or “if I change this, it will become a whole other tool”. That's still how I make. I'll also add it's cool to see other artists using the tools. One of the best things is seeing a tool you've made being used in a way you didn't intend for it to be used. I'll have brushes go out, and people say “this is great for doing {fill in the blank}”, and I'll say “Oh I never thought of that, that's cool”. They wound up giving me ideas for what to build, and I could build things that artists wanted. It was really neat to become that guy who could help out the community; I became a sort of resource.

AB. Your brushes are well-regarded for their ability to emulate real-world materials. After sharing your experiences of overlapping the roles of Tool User and Tool Maker, I’m curious if you have thoughts on the relationship between digital and physical? Does your work exist differently in those two spaces?

KW. With traditional media I go one of two ways: I either do a lot of planning up front because I know I can't make mistakes, or I throw caution to the wind and just start messing around, but I have to be okay with the results not being good. With digital media, the person who put it best was the artist Christoph Niemann, who said something like, “The best thing about digital painting is it takes a traditional process and makes it 100% reversible and more flexible”. That's really all digital art is in a nutshell. The reason it's so great is that any decision you make you can undo, and the flexibility is just endless. I'm definitely more experimental, more reckless, more… I'm just free in a digital environment. I think that worry-free creative space is where a lot of my best personal work actually winds up coming from.

An absence of machine intelligence

It’s worth noting at this point that machine intelligence isn’t actually present in Lieberman’s or Webster’s projects. Arguably, though, this is a helpful fact…

There’s a version of these essays that offers machine intelligence as the catalyst for radical change in all creative behavior, but it would leave creatives unconvinced by a vision too disconnected from their present reality. In contrast, Lieberman, Webster, and perhaps all creatives who’ve ever packaged work in a form intended for others to interact with have shown that this “meta” space, where creative work and creative tools overlap, is already open for creatives to step into.

But if that’s the case, then where does machine intelligence play a role in this “meta” narrative?

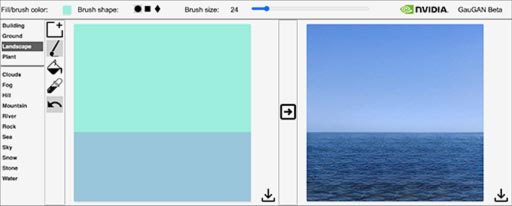

Data, patterns, and behavior: Madeline Hsia on machine intelligence as a creative material

In early 2020, Madeline Hsia, an Experience Designer at Adobe, was experimenting with an open-source machine learning model capable of generating photo-real images. The model (a GAN called “SPADE”7) had been trained on an open-source image dataset (called “COCO”8) assembled from publicly available images posted to the website Flickr. The dataset’s 330,000 images had also been hand-labeled, identifying almost 200 different materials and objects for the model to observe during training. SPADE was the result, and it was available to play with on the web through a basic painting interface, NVIDIA’s GauGAN demo.9

The workspace consisted of two side-by-side canvases and a library of the materials the GAN had been trained on. On the left was the input canvas, where users painted in bright solid colors to indicate which materials should be generated where. On the right was the output canvas, which displayed the photo-real image produced by the GAN. By painting on the input canvas, users could see on the output canvas how SPADE would interpret and depict their scene in photo-real pixels.
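The painting interface described above can be sketched in code. SPADE, as described by Park et al., generates an image conditioned on a semantic label map, and such maps are commonly fed to the network as one-hot masks: one binary channel per material. The minimal Python sketch below assumes a hypothetical three-color palette (the demo’s actual colors and label IDs are not given in this essay; `PALETTE`, `canvas_to_label_map`, and `one_hot` are illustrative names) and shows how a canvas painted in solid colors might be turned into that kind of input. It does not generate any images; the GAN itself is out of scope.

```python
# Hypothetical palette for illustration only: the essay does not specify the
# colors or label IDs used by the GauGAN/SPADE demo.
PALETTE = {
    (135, 206, 235): "sky",
    (0, 128, 0): "grass",
    (0, 0, 255): "water",
}

# Fix a channel order for the materials (alphabetical: grass=0, sky=1, water=2).
LABELS = sorted(set(PALETTE.values()))


def canvas_to_label_map(canvas):
    """Map each painted RGB pixel to the index of the material it stands for."""
    label_map = []
    for row in canvas:
        indices = []
        for pixel in row:
            if pixel not in PALETTE:
                raise ValueError(f"color {pixel} is not a known material")
            indices.append(LABELS.index(PALETTE[pixel]))
        label_map.append(indices)
    return label_map


def one_hot(label_map, num_classes):
    """Expand a label map into one binary mask per material (channel-first)."""
    return [
        [[1 if index == channel else 0 for index in row] for row in label_map]
        for channel in range(num_classes)
    ]


if __name__ == "__main__":
    SKY, GRASS, WATER = (135, 206, 235), (0, 128, 0), (0, 0, 255)
    # A tiny 2x3 "painting": sky along the top, grass and water below.
    canvas = [
        [SKY, SKY, SKY],
        [GRASS, WATER, WATER],
    ]
    labels = canvas_to_label_map(canvas)
    masks = one_hot(labels, len(LABELS))
    print(labels)
    for name, mask in zip(LABELS, masks):
        print(name, mask)
```

Each material gets its own binary mask rather than sharing a single numeric code, so the model is never asked to treat “water” as somehow larger than “grass”; the painted colors are simply a human-friendly way of choosing which mask is switched on at each pixel.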

After playing in this creative space, Hsia documented some observations, shared below. In particular, she draws attention to moments where the output image was unexpected, inspiring a deeper consideration of GANs as a creative material:

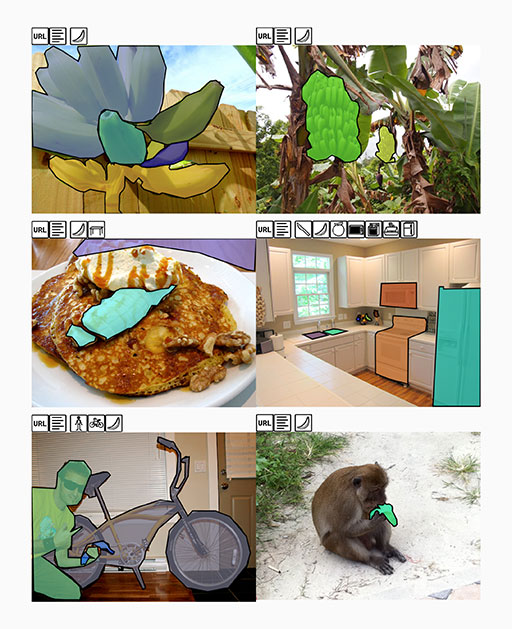

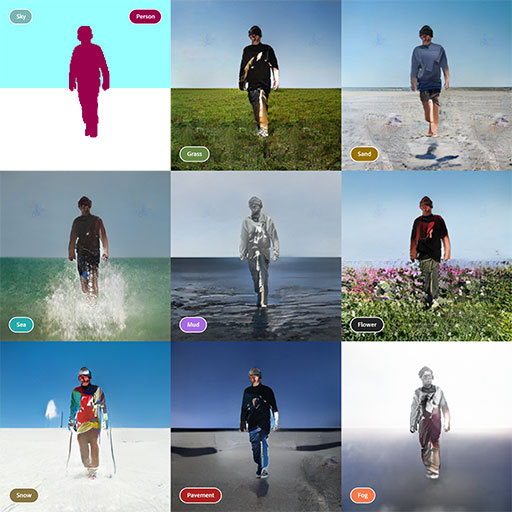

“Where some generated images align quite well with our human expectations of that scene, others don’t. Why is this?

The ‘experience’ of the machine is essentially its training dataset. A GAN trained on photographs learns the underlying patterns that make up an image, which it uses to consider the shapes, labels, and the contextual relationships between elements. But it has no further understanding of what is actually possible in the physical world. The generated images are simply made out of visual patterns learned from thousands of examples.

Training datasets, despite consisting of real photographs, are not aligned with anyone’s particular experience, and might even defy commonly expected reality. For example, the COCO dataset used to train SPADE is incredibly diverse: the ‘banana’ label includes anything from chopped bananas, bunched bananas, single bananas, and super-zoomed-in bananas to even blurry bananas. All of these bananas in COCO were recognized and annotated by humans for the machine to learn from.”

What the machine learns are the patterns within the training data: patterns shaped by where the images came from, by the intent and unique perspectives of the thousands of people who originally uploaded them, and then by the people who curated and cleaned the data. Today’s training datasets are often a unique amalgamation of public images that ended up online.

A GAN trained on photographs can learn many patterns that are obvious to humans, for example, that water produces reflections. But it can also generate outputs that make no photographic sense, revealing patterns within the training dataset that humans might never have noticed and cannot immediately understand.

We usually think of patterns as something instantly recognizable and intuitive, but when it comes to pattern recognition through a huge neural network, we should consider the existence of novel patterns. To interact with GANs as a new type of material is to pay attention to novel patterns in a way that not only deepens our understanding of machine learning and data collection, but also allows us to internalize and inspect an alternative view of the world that is only available through machines.

Reflections from “the meta” for new ways of working

Despite working with very different technologies, Hsia and Webster arrive at surprisingly similar conclusions from their experiences. For both, exercising an intentional awareness of how their materials and tools influence each other reveals the “meta” in a way that resembles two mirrors facing each other. The effect is a visual feedback loop, where a mirror reflects a mirror, reflects a mirror, and so on… or where creative tools reflect creative materials, reflect creative tools, reflect creative materials… To look at one is also to see the other, and therefore to change one is also to change the other.

What’s especially noteworthy in these accounts is how “the meta” inspires new approaches to creating. In particular, Webster’s description of a more iterative process appearing in both his brush and illustration work echoes our themes of the next creative wave: expanding creative processes, reimagined file formats, and an evolving creative landscape. For Hsia, the data-informed behaviors of machine intelligence draw attention to “the meta” and solidify a creator’s presence within it. Even the most basic use of a GAN to generate output produces signals that tell us about the make-up of that tool and the data that informed it.

In a future creative landscape where machine intelligence is embedded across its domains, creative tools and materials become meta. This drives overlaps between tool using and tool making, where materials can inform tools and vice versa, and both are available to be creatively reshaped.

A prompt for self-reflection

Within this analogy of a mirror feedback loop, experiencing the effect first-hand requires us to stand between the two mirrors as they face each other. In doing so, we see ourselves in the infinite reflection. Perhaps this means creatives are also subject to some reshaping in the next creative wave…

Further thinking

Ask yourself or discuss with others

- Have you ever seen or played with an interactive creative project? What was the piece and how did you experience it? Can you describe how it worked? How did it feel?

- Have there been times in your work where you’ve felt like a “Tool Maker” instead of a “Tool User”? What was the project? Did you approach it differently compared to the things you normally create as a “Tool User”?

- Madeline Hsia describes how a dataset is constructed and labeled to train a machine learning model that generates images. If you could train a model to generate images, what dataset would you construct? What would it include examples of? And what would you want the trained model to output?

- What do you think about a future where there’s more overlap between creative works and creative tools? How might your work be different?

References

- “Watch: Zach Lieberman, ‘Another Copy 6’.” Frieze, 6 May 2019, frieze.com/video/watch-zach-lieberman-another-copy-6. Accessed 30 June 2021.

- @zachlieberman. “Come find me at frieze.” Twitter, 4 May 2019, 6:17 a.m., twitter.com/zachlieberman/status/1124664442535456768. Accessed 30 June 2021.

- @zachlieberman. “people dismiss interactive mirrors but I think it’s powerful to see your body transformed in new ways, to be reminded that you have a body and to explore the expressive space of gesture through augmentation. we mostly just click, type and swipe, etc — feels good to really move.” Twitter, 5 May 2019, 8:00 a.m., twitter.com/zachlieberman/status/1125052715732742144. Accessed 30 June 2021.

- Lieberman, Zach. “@zachlieberman.” Twitter, twitter.com/zachlieberman. Accessed 30 June 2021.

- “Free Template Projects: Photos, Videos, Logos, Illustrations and Branding on Behance.” Behance, behance.net/search/projects/?search=Free%20Template&sort=recommended&time=month. Accessed 30 June 2021.

- Whitaker, Lauren. “Adobe Systems Acquires Kyle Webster's Digital Paintbrush Company.” UNCSA News, 7 Nov. 2017, uncsa.edu/news/20171107-kyle-webster-brushes.aspx. Accessed 30 June 2021.

- Park, Taesung, et al. “Semantic Image Synthesis with Spatially-Adaptive Normalization.” ArXiv.org, 5 Nov. 2019, arxiv.org/abs/1903.07291. Accessed 30 June 2021.

- Lin, Tsung-Yi, et al. “Microsoft COCO: Common Objects in Context.” ArXiv.org, 21 Feb. 2015, arxiv.org/abs/1405.0312. Accessed 30 June 2021.

- “NVIDIA GauGAN.” NVIDIA Research, nvidia-research-mingyuliu.com/gaugan. Accessed 30 June 2021.